The sovereign AI conversation usually ends the same way: "We need our own infrastructure."

Then reality hits. Data centers cost hundreds of millions. They take years to build. Most organizations don't have the operational expertise to run them.

This framing is wrong. Sovereign AI doesn't require owning data centers. It requires controlling where your workloads run.

The infrastructure trap

When enterprises face data residency requirements, the instinct is to build or buy.

Option 1: Build your own. $500M+ capital. 3-5 years to deploy. Massive operational complexity. For most organizations, this isn't realistic.

Option 2: Colocate locally. Better, but still requires significant upfront investment, hardware procurement, and ongoing management. You're running a mini-cloud.

Option 3: Wait for hyperscalers. AWS, Google, and Microsoft are building sovereign cloud regions, but slowly and at premium pricing. France's SecNumCloud requirements still exclude most hyperscaler offerings for public sector work.

All three options assume you need to own infrastructure. For AI inference specifically, you don't.

Inference routing as an alternative

AI inference is stateless. Unlike databases, which need persistent local storage, each inference request is self-contained. The model processes your prompt and returns a response. Nothing needs to persist after the request completes.

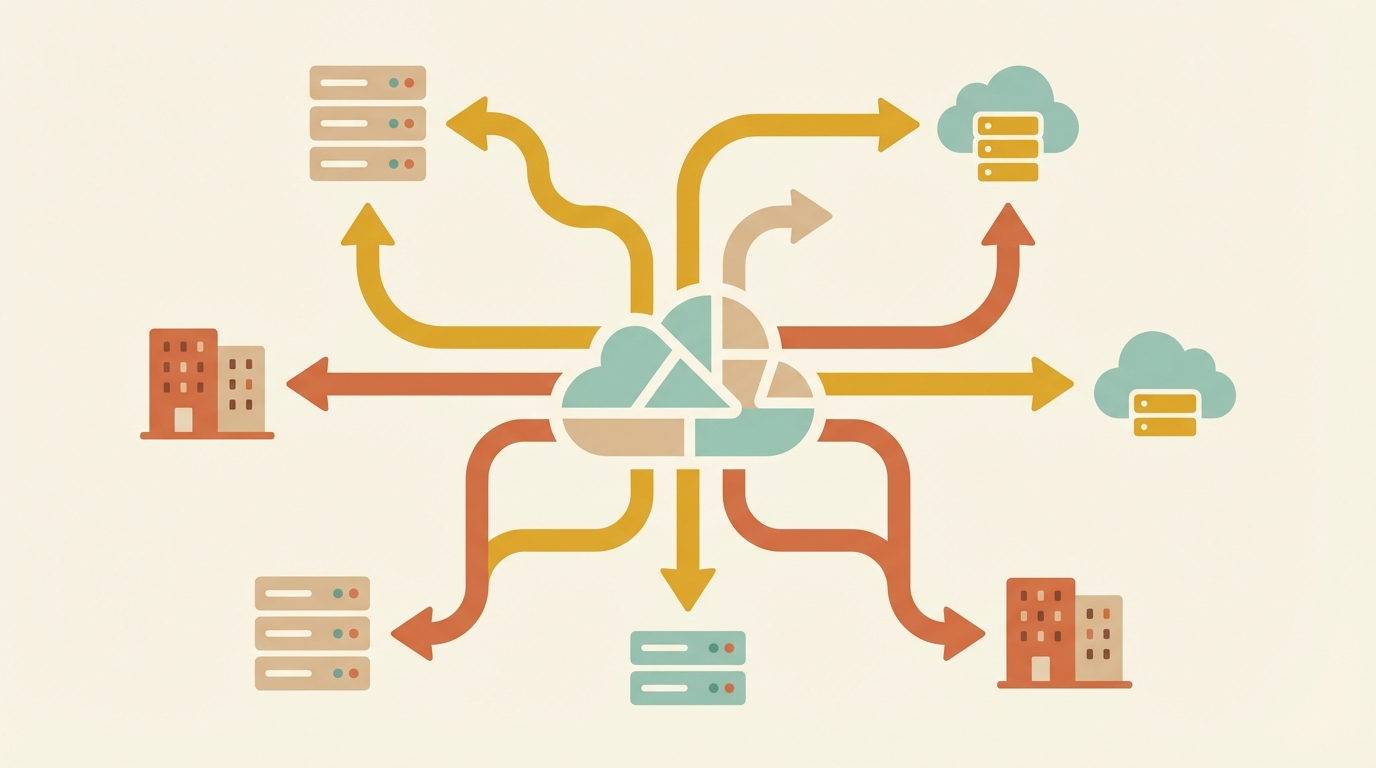

This means inference workloads can route dynamically to wherever compliant compute exists, without you building or owning that compute.

The architecture:

    Your Application
            ↓
    Inference Router (compliance-aware)
            ↓
    ┌────────────────────┬───────────┐
    │ Brazil GPU Cluster │ India DC  │
    │ EU Sovereign Zone  │ APAC Node │
    └────────────────────┴───────────┘

Your application makes one API call. The router decides where to run it based on:

- User jurisdiction (from request metadata)

- Data classification (PII, financial, healthcare)

- Compliance requirements (LGPD, DPDP, GDPR)

- Model availability

- Cost and latency

The request routes to compliant infrastructure automatically. Your code doesn't change.
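
To make the routing decision concrete, here is a minimal sketch in Python. It is not any particular router's implementation: the `Cluster` and `InferenceRequest` types, the cluster names, the prices, and the policy table are all hypothetical. It also assumes a deliberately strict policy (sensitive data stays in the user's own jurisdiction), which is a simplification of what the actual laws require.

```python
from dataclasses import dataclass

# All names, regions, and prices below are hypothetical illustration values.

@dataclass(frozen=True)
class Cluster:
    name: str
    jurisdiction: str        # where the hardware sits, e.g. "BR", "IN", "EU"
    regimes: frozenset       # regimes the operator attests to, e.g. {"LGPD"}
    models: frozenset        # models served on this cluster
    cost_per_1k_tokens: float
    latency_ms: float

@dataclass(frozen=True)
class InferenceRequest:
    user_jurisdiction: str
    data_class: str          # "pii", "financial", "healthcare", "public", ...
    model: str

# Assumed policy table: which regime applies to users in each jurisdiction.
REQUIRED_REGIME = {"BR": "LGPD", "IN": "DPDP", "EU": "GDPR"}
SENSITIVE_CLASSES = {"pii", "financial", "healthcare"}

def eligible_clusters(req, clusters):
    """Apply the hard constraints: model availability, then compliance."""
    regime = REQUIRED_REGIME.get(req.user_jurisdiction)
    must_stay_local = req.data_class in SENSITIVE_CLASSES and regime is not None
    result = []
    for c in clusters:
        if req.model not in c.models:
            continue
        if must_stay_local and (
            c.jurisdiction != req.user_jurisdiction or regime not in c.regimes
        ):
            continue
        result.append(c)
    return result

def route(req, clusters):
    """Among eligible clusters, prefer the cheapest, then the fastest."""
    candidates = eligible_clusters(req, clusters)
    if not candidates:
        raise LookupError(
            f"no compliant cluster serves {req.model} for {req.user_jurisdiction}"
        )
    return min(candidates, key=lambda c: (c.cost_per_1k_tokens, c.latency_ms))

CLUSTERS = [
    Cluster("br-sao-1", "BR", frozenset({"LGPD"}), frozenset({"model-a"}), 0.40, 35.0),
    Cluster("eu-fra-1", "EU", frozenset({"GDPR"}), frozenset({"model-a", "model-b"}), 0.55, 20.0),
    Cluster("in-mum-1", "IN", frozenset({"DPDP"}), frozenset({"model-a"}), 0.30, 60.0),
]

print(route(InferenceRequest("BR", "pii", "model-a"), CLUSTERS).name)
```

Compliance and model availability act as filters, and cost and latency only rank what survives the filters. A request with no compliant option fails loudly instead of falling back to a non-compliant cluster.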

What this gets you

Instant global compliance

Adding a new market doesn't require building infrastructure. If compliant compute exists there through any provider, you can route to it immediately.

When Indonesia tightens localization requirements, you don't scramble for hardware. You update routing rules.
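
As a rough illustration of "updating routing rules", the sketch below treats the rules as plain data. The rule table, the region codes, and the `allowed_regions` helper are assumptions made for this example, not a real router's configuration format or legal guidance.

```python
# Hypothetical rule table: user jurisdiction -> regions where that user's
# sensitive data may be processed.
ROUTING_RULES = {
    "BR": {"BR"},
    "IN": {"IN"},
    "EU": {"EU"},
}

# Regions the (hypothetical) provider network can reach.
ALL_REGIONS = {"BR", "IN", "EU", "ID", "US"}

def allowed_regions(user_jurisdiction: str, rules: dict[str, set[str]]) -> set[str]:
    """Return the regions a request from this jurisdiction may run in."""
    return rules.get(user_jurisdiction, ALL_REGIONS)

# Before any localization rule exists, Indonesian traffic is unrestricted.
before = allowed_regions("ID", ROUTING_RULES)

# A localization requirement arrives: the change is one new entry.
updated_rules = {**ROUTING_RULES, "ID": {"ID"}}
after = allowed_regions("ID", updated_rules)

print(sorted(before), sorted(after))
```

The point of the sketch is the size of the change: one new dictionary entry, assuming compliant compute already exists in the target region.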

Cost optimization within compliance bounds

Not all compliant compute costs the same. GPU pricing varies across providers and regions. Smart routing can optimize for cost while respecting compliance boundaries.

A request from an EU user might route to the cheapest GDPR-compliant cluster available right now. Your application doesn't know or care.

Resilience without redundancy

Traditional compliance approaches need redundant infrastructure in each jurisdiction. With inference routing, resilience comes from the provider network.

If one Brazil-compliant cluster goes down, traffic routes to another. Your SLA doesn't depend on any single facility.
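
A minimal failover sketch, assuming each cluster exposes a callable that either returns a response or raises. The `ClusterUnavailable` exception, the cluster names, and the stand-in client functions are hypothetical. The important detail is that the failover list only ever contains clusters in the same compliance boundary.

```python
from typing import Callable, Sequence

class ClusterUnavailable(Exception):
    """Raised by a (hypothetical) cluster client that cannot serve a request."""

def call_with_failover(
    prompt: str,
    clusters: Sequence[tuple[str, Callable[[str], str]]],
) -> tuple[str, str]:
    """Try each compliant cluster in order; return (cluster_name, response)."""
    errors = []
    for name, send in clusters:
        try:
            return name, send(prompt)
        except ClusterUnavailable as exc:
            errors.append(f"{name}: {exc}")
    raise ClusterUnavailable("all compliant clusters failed: " + "; ".join(errors))

# Stand-ins for real cluster clients.
def br_primary(prompt: str) -> str:
    raise ClusterUnavailable("maintenance window")

def br_secondary(prompt: str) -> str:
    return f"echo: {prompt}"

# Only Brazil-compliant clusters are listed, so failover never leaves Brazil.
BR_COMPLIANT = [("br-primary", br_primary), ("br-secondary", br_secondary)]

name, reply = call_with_failover("hello", BR_COMPLIANT)
print(name, reply)
```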

Incremental adoption

You don't have to migrate everything at once. Start by routing compliance-sensitive workloads through the compliant path. Keep other workloads on existing infrastructure.

As requirements tighten or you enter new markets, expand routing rules. No forklift migrations.
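
One way to picture incremental adoption, as a sketch only: pick the endpoint per request based on data classification, and grow the set of routed classes over time. Both URLs and the class names are placeholders invented for this example.

```python
# Hypothetical endpoints: the existing setup and a compliant router.
EXISTING_ENDPOINT = "https://inference.internal.example/v1"
COMPLIANT_ROUTER = "https://router.example/v1"

# Start small: only these data classes take the compliant path.
ROUTED_CLASSES = frozenset({"pii", "healthcare"})

def endpoint_for(data_class: str, routed_classes: frozenset = ROUTED_CLASSES) -> str:
    """Send compliance-sensitive workloads to the router, everything else as before."""
    return COMPLIANT_ROUTER if data_class in routed_classes else EXISTING_ENDPOINT

print(endpoint_for("pii"))
print(endpoint_for("marketing-copy"))

# Later, when financial data falls under a new requirement, widen the set.
print(endpoint_for("financial", ROUTED_CLASSES | {"financial"}))
```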

The network effect

Inference routing gets better as the provider network grows. Each new GPU cluster in a new jurisdiction expands what's possible.

This creates a flywheel:

- Enterprise demand for compliant inference grows

- GPU providers in emerging markets see opportunity

- More compliant compute comes online

- Routing becomes more effective and cheaper

- Demand grows further

This is already happening. IndiaAI Mission is deploying 10,000+ GPUs. Brazil is expanding domestic compute. Indonesia and Malaysia are attracting data center investment driven specifically by sovereignty requirements.

The infrastructure is being built. The question is whether you can access it efficiently.

What to look for in an inference router

Jurisdictional awareness

Can it determine request jurisdiction from IP, user metadata, or explicit flags? Can it apply different routing rules based on that?
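
A sketch of how those three signals might be combined, assuming a precedence of explicit flag, then user metadata, then IP. The function names and the prefix table are hypothetical. The table stands in for a real GeoIP database and uses RFC 5737 documentation ranges, not real allocations.

```python
import ipaddress
from typing import Optional

# Hypothetical prefix table standing in for a real GeoIP database.
IP_PREFIXES = [
    (ipaddress.ip_network("192.0.2.0/24"), "BR"),
    (ipaddress.ip_network("198.51.100.0/24"), "IN"),
]

def jurisdiction_from_ip(ip: str) -> Optional[str]:
    """Look up the client IP in the prefix table; None if unknown."""
    addr = ipaddress.ip_address(ip)
    for network, code in IP_PREFIXES:
        if addr in network:
            return code
    return None

def resolve_jurisdiction(
    explicit_flag: Optional[str] = None,
    user_country: Optional[str] = None,
    client_ip: Optional[str] = None,
) -> Optional[str]:
    """Most trustworthy signal first: explicit flag, account metadata, then IP."""
    if explicit_flag:
        return explicit_flag.upper()
    if user_country:
        return user_country.upper()
    if client_ip:
        return jurisdiction_from_ip(client_ip)
    return None

print(resolve_jurisdiction(explicit_flag="eu", client_ip="192.0.2.10"))
print(resolve_jurisdiction(client_ip="192.0.2.10"))
```

IP is the weakest signal (VPNs, roaming users), which is why it comes last here. A `None` result is best treated conservatively by the caller, for example by rejecting the request or applying the strictest rule set.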

Compliance certification

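What certifications does the underlying compute hold? SOC 2 is baseline. For specific markets, you also need evidence that the provider meets LGPD, DPDP, or the equivalent local law, since these are regulations to comply with rather than certifications to hold.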

Audit trail

Compliance isn't just about where data goes. It's about proving where data went. You need detailed logs of which infrastructure processed each request.
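
As an illustration of what one audit entry could contain, here is a sketch that emits a JSON line per request. The field names are assumptions for this example, not a standard schema. It stores a digest of the prompt, not the prompt itself, so the log does not become a second copy of the data it is meant to protect.

```python
import hashlib
import json
from datetime import datetime, timezone

def audit_record(
    request_id: str,
    user_jurisdiction: str,
    cluster_name: str,
    cluster_jurisdiction: str,
    model: str,
    prompt: str,
) -> str:
    """Build one JSON log line describing where a request was processed."""
    record = {
        "request_id": request_id,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_jurisdiction": user_jurisdiction,
        "cluster": cluster_name,
        "cluster_jurisdiction": cluster_jurisdiction,
        "model": model,
        # Digest only: the log line carries no user content.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
    }
    return json.dumps(record, sort_keys=True)

line = audit_record("req-001", "BR", "br-sao-1", "BR", "model-a", "my private prompt")
print(line)
```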

Model availability

Compliance doesn't help if the models you need aren't available on compliant infrastructure. Verify that your required models work through compliant routes.
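
A small pre-flight check along these lines can surface gaps before you commit to a market. The catalog shape and the `missing_coverage` helper are hypothetical. A real router would expose its own way of listing models per region.

```python
def missing_coverage(
    required_models: set[str],
    jurisdictions: set[str],
    catalog: dict[str, set[str]],
) -> list[tuple[str, str]]:
    """Return (jurisdiction, model) pairs that no compliant cluster serves."""
    gaps = []
    for jurisdiction in sorted(jurisdictions):
        served = catalog.get(jurisdiction, set())
        for model in sorted(required_models - served):
            gaps.append((jurisdiction, model))
    return gaps

# Hypothetical catalog: models available on compliant compute, per jurisdiction.
CATALOG = {
    "BR": {"model-a"},
    "EU": {"model-a", "model-b"},
}

gaps = missing_coverage({"model-a", "model-b"}, {"BR", "EU", "IN"}, CATALOG)
print(gaps)
```

With this sample catalog, the check reports that `model-b` is missing in Brazil and that nothing is available in India yet.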

OpenAI API compatibility

The easiest migration is a drop-in replacement. If the router is OpenAI-compatible, you swap the base URL and you're running on compliant infrastructure.
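
To show what "swap the base URL" means in practice, the sketch below builds the same chat completions request against two base URLs using only the standard library. The router URL, the model name, and the API key are placeholders, and the sketch assumes the router mirrors OpenAI's `/chat/completions` path and bearer-token header.

```python
import json
import urllib.request

OPENAI_BASE_URL = "https://api.openai.com/v1"
ROUTER_BASE_URL = "https://router.example/v1"  # hypothetical OpenAI-compatible router

def build_chat_request(
    base_url: str, api_key: str, model: str, user_message: str
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = json.dumps(
        {"model": model, "messages": [{"role": "user", "content": user_message}]}
    ).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

before = build_chat_request(OPENAI_BASE_URL, "sk-placeholder", "model-a", "Hello")
after = build_chat_request(ROUTER_BASE_URL, "sk-placeholder", "model-a", "Hello")

# Same payload, different destination. Sending it would be
# urllib.request.urlopen(after); omitted here to avoid a network call.
print(before.full_url)
print(after.full_url)
```

The request body is identical in both cases, and only the destination changes. If you use an OpenAI client library, the equivalent change is usually a single base URL setting.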

Bottom line

Sovereign AI is a requirement. Data residency laws are tightening. Market access depends on compliance.

But sovereignty doesn't require owning infrastructure. It requires controlling where workloads run.

Inference routing gives you that control without capital expense, operational burden, or multi-year timelines.

The sovereign AI future is here. The question isn't "do we build it ourselves?" It's "how do we route to it?"